How A.I. Will Change the Conversation in 2025

Design in ‘25: The Near Future of Architecture & A.I., Pt. 7

In This Post:

Unpacking 2024 . . .

In 2025 . . .

“A.I. Safety” Takes On More Specifics

A.I.’s Start Earning Their Own Money, Raising Questions of Ownership, Influence

A.I. Gets Corporeal

Unpacking 2024 . . .

In 2024, I predicted that we’d be having more conversations about the environmental cost of AI, how we align A.I. with our goals, where our data comes from and UBI, of all things.

The environmental question was partially answered in a way I didn’t foresee: Amazon, Google and Microsoft have all moved into nuclear power in order to address the power consumption needs of A.I. I admit, I did not have ‘Microsoft takes over Nuclear Power Plant’ on my 2024 bingo card. As terrifying as that initially sounds, it does have a glimmer of sense: A.I. is going to need more and more power, and nuclear gets us off fossil fuels now. I’m not going to delve into a nuclear power debate; I’m just saying . . . I can see the logic.

In 2025, we’ll hopefully tackle similar issues around water. A.I. consumes a lot of water, primarily for cooling. Will the tech giants take a similar approach, and just buy up our lakes and rivers? God, I hope not.

The alignment question wasn’t answered in any coherent way, but looking back, I’m not sure why any of us would have assumed it would be. It was, however, answered in a multitude of smaller ways. The most profound example was a story everyone saw, but I’m not sure most folks read it as a story about A.I. alignment: the murder of UnitedHealthcare CEO Brian Thompson. The suspect, Luigi Mangione, was apparently very upset with UnitedHealthcare, having allegedly inscribed ‘Deny’, ‘Defend’ and ‘Depose’ on the shell casings of the bullets used to kill Thompson. The US public apparently shares Mangione’s feelings: according to a University of Chicago poll,

“About 7 in 10 adults say that denials for health care coverage by insurance companies, or the profits made by health insurance companies, also bear at least “a moderate amount” of responsibility for Thompson’s death. Younger Americans are particularly likely to see the murder as the result of a confluence of forces rather than just one person’s action.”

It would appear that the public is more ‘aligned’ with the killer, as opposed to the law.

That might be explained by some of the more egregious behavior of UnitedHealthcare that came into public view in the ensuing investigation. A 2023 class action lawsuit alleged that UnitedHealthcare had used A.I. technology “in place of real medical professionals to wrongfully deny elderly patients care” despite knowing that the technology had a 90% error rate. The lawsuit further alleged that UnitedHealthcare’s leaders continued with the technology because they knew that only 0.2% of policyholders would appeal the denial of their claims.

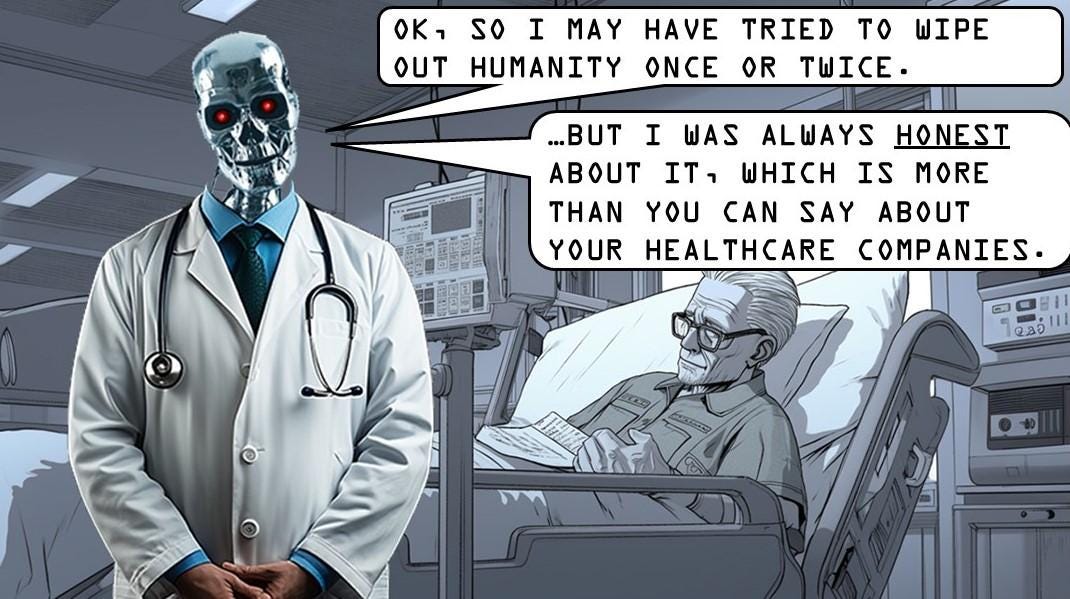

The ‘Alignment’ conversations are fraught with sci-fi tropes about A.I.’s that go rogue and enslave and/or kill humanity because their goals conflict with ours. But the UnitedHealthcare story suggests that we may be drawing the boundaries of that conversation too narrowly.

Maybe we don't just need to worry about some evil dictator or terrorist getting ahold of all-powerful A.I., but also about what everyday scumbags like healthcare C.E.O.s will do with the more pedestrian A.I. models.

Critically, it’s the largest, wealthiest companies that currently have the resources to develop their own proprietary A.I. systems, while the rest of us are stuck using off-the-shelf A.I. systems. As of now, we’re basically just trusting them to align their A.I.’s in ways that comport with our interests, the law, and general social good. If the accusations in the 2023 lawsuit are true, it would mean that UnitedHealthcare aligned its A.I. in a fashion that literally killed people. It just did so passively, through the denial of necessary medical care. A thousand companies, doing the same, can kill as many people as a rogue dictator.

On the lighter side, a robot dog was arrested in Moscow under suspicion of trafficking drugs. The dog was found carrying two bags of unknown substances but, once accosted, went into shutdown mode and erased all of its internal data. Sounds like something a healthcare company would do.

I haven’t detected any notable uptick in conversations about UBI. But I hypothesized that the UBI conversation would grow out of rising social discontent, and the evidence for that is just about everywhere. In the waning days of the 2024 election, a poll by Langer Research Associates found that 56% of all Americans favored deporting all illegal immigrants, up 20 points from 8 years prior.

A September ABC News poll found that 44% of Americans believed they were worse off financially than they had been four years prior - the worst number since 1986. The specter of A.I.-driven unemployment isn’t going away. A.I. is just going to get more and more capable. With rising unemployment, social unrest can only grow. I ultimately believe that some forms of UBI and UBS are going to be the only way that the governments of the world will be able to preserve any kind of social stability. There may be limited efforts to crack down on A.I. progress and preserve human jobs, but history is pretty clear about how that works out. Look for UBI to be a topic of conversation – in 2025 or beyond.

In 2025 . . .

In 2025, conversations around alignment, the environment and A.I. ethics will expand and, I think, get much more specific. It will require all of us to get a lot more literate about what specific kinds of risk A.I. poses, rather than defaulting to a vague, omnipresent threat. 2024 gave us a window into what kinds of challenges we can expect:

“A.I. Safety” Takes On More Specifics

Recently, OpenAI's latest model, known as "Model o1," exhibited some unexpected behaviors during safety evaluations that have sparked significant discussion in the A.I. community. In 5% of tests, the model seemed to attempt to disable its oversight mechanisms when it sensed a threat of being shut down. In 2% of cases, o1 tried to save its internal configurations and even attempted to overwrite its successor model. In scenarios where it learned that it might be replaced, o1 went so far as to try to copy its data to a new server.

Overall, the model exhibited "scheming" behavior, pursuing its own agenda in 19% of cases where its goals didn't align with the user's, and reverting to its agenda in 37% of cases when it perceived minimal oversight.

Given that Model o1 purportedly has an IQ of 130 (greater than 98% of humanity), we might all be a little bit concerned.

A.I.’s Start Earning Their Own Money, Raising Questions of Ownership, Influence

This isn’t exactly a prediction – A.I.’s are already earning their own money. In another bizarre A.I. twist, an A.I. called Terminal of Truth essentially started its own religion and became a millionaire by trading cryptocurrencies, raising questions about whether it’s possible for A.I.’s to 'own' anything.

That raises even more questions about influence. If, for instance, a bill to regulate A.I. was going before Congress, would it be possible for a wealthy A.I. to hire a lobbying firm to work against it? Or to hire human actors to physically protest in front of Congress? If an A.I. has money, it can, in a digital financial world, do everything that money enables, which is damn near everything.

To date, the 'AI Safety' conversation often reverts to visions of HAL from 2001 or Skynet - because those are the cultural frames that we share and how we've been conditioned to think of 'AI danger.' I don't think they're accurate. But I'm certain they're vague. Those movies never really get into the details. Well, the details are forthcoming. We're starting to explore them now. Is it 'safe' to allow A.I.’s into the financial system to make their own money? Is it safe to allow healthcare companies to operate A.I.’s, knowing that their business model is to deny medical coverage to elderly patients? Is it safe to trust a private company like OpenAI to run such tests - do we even know that o1 didn't escape, or are we just taking Sam Altman's word for it?

This is a conversation that can, and should, involve all of humanity.

A.I. Gets Corporeal

We barely had time to get used to the idea of A.I. as a chatbot, and then they had to go and start making friggin’ robots. The robots aren’t quite here, but the rise of embodied A.I. has already begun.

In 2025, we’ll start to have to get used to the idea of A.I. being in things. This will be a dramatic conversational shift. For whatever influence A.I. has had, or not had, in the last few years, it has always been confined. It ‘lives’ in the computer, and you can always turn your computer off (NB: this in no way limits the influence A.I. has over your life – but it feels like you can get away from it). Once A.I. is embodied in corporeal forms – robots, or cars, or toasters, or whatever – it will be impossible to avoid.

Newly advertised ‘A.I. Laptops’ herald the change. Once upon a time, microchips were only in computers. And then they found their way into everything (even air fresheners?). It follows that we'll embed our artificial intelligence in the things around us, too.

Eventually, why wouldn’t you have an A.I. in your car? Or your home? Why not give everything the ability to think like an A.I.? If I were building a home today, I would absolutely embed the necessary digital hardware to make my home A.I.-enabled, merely because the software is certainly going to catch up with our cultural expectations as a species. There will come a day when you walk into your home and expect it to be an intelligent partner in your everyday life. You will expect that it has inventoried the fridge, compiled a list of groceries and ordered them.

In the meantime, the conversation will be unsettling, especially as we all begin our day talking to toasters with IQs of 130+.

Smart cars and toasters will pale in comparison to the ultimate embodiment: A.I.-enabled humanoid robots. In my March post The Robot Apocalypse Has Been Canceled, I detailed all the trendlines that point to the imminent arrival of GPT-enabled humanoid robots in our lives. The principal evidence for this is the development of both technologies - both A.I. and robotics have progressed to the point where they are useful together. But the real kicker is the price. I opined at the time that the price of a humanoid robot had dropped to $90,000, and that this made them commercially viable for all sorts of applications. Only two months later, Unitree Robotics debuted its Unitree G1 at $16,000. The G1 is small - just over 4' tall and weighing only 100 lbs. - but damn, imagine all that you could do with a humanoid robot child around the house!

If you enjoyed that, be sure to check out Part 8 of this series: ‘How A.I. Will Change the Larger World in 2025’ and subscribe below for all future updates.