AI as a Global Rorschach Test

How What We Think About AI Tells Us Something About Ourselves

I’m calling it now. Any article in the next few years that lends a respectable scientific or academic imprimatur to claims of “AI can’t do X” or “AI won’t take your job” will be met with enthusiastic applause, regardless of its actual merits. That is in no way to suggest that those articles are meritless. I think there are and will be legitimate arguments for those claims. But legitimacy is overrated, because AI has effectively become a global Rorschach test – one where we see the future that we want to see.

A psychologist friend once explained to me that Rorschach tests don’t actually mean anything. They’re quite deliberately just vague blotches, designed to allow for infinite interpretation. Because that blot could be a million damn things, your interpretation of any one of those million things tells the tester that you’re probably alright in the head. It’s when you start seeing things that aren’t there that they start fitting the straitjacket (e.g. the blot is speaking to me and telling me that Brutalist architecture is actually beautiful).

Two recent papers blew up my social media feed and discussion boards, and got me thinking about AI as one of those famous Rorschach inkblots. In my feeds, both papers seemed to travel at roughly the same velocity — tweeted, reshared, and quoted with a kind of vindicated exuberance, as if the shell of “AI Hype” had finally been cracked.

While I was writing this, a third paper was streaking across my feeds: “Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task,” a paper by MIT researchers that says, basically, ‘working with LLMs makes you stupid.’ It reminded me of all the other things that they claimed made us stupid: heavy metal, television, comic books, jazz, and . . . radio?

I’m only going to focus on the first two papers because, honestly, I use LLMs every day, and if they do in fact make you stupid, then I would be unqualified to evaluate a paper that claimed as much.

The first paper, from researchers at Apple, opined that so-called ‘reasoning’ LLMs weren’t really reasoning at all, and purported to have found the threshold at which they collapse into confusion and nonsense. The second paper, by researchers at Yale, argued that we could parse ‘work’ into smaller, more nuanced categories, and then make estimates about which of those categories might be replaced by AI, thereby giving human workers a roadmap to relevance in an AI future.

It occurred to me that juxtaposing them would make a great post, because the first one was hot garbage, and the second made a fair bit of sense (I didn’t ultimately fully agree with the author’s conclusions, but appreciated the experimental setup and the thoughtfulness of the paper itself).

Paper 1: The Illusion of the Illusion of Thinking

Researchers at Apple recently published The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity, and it was quickly followed by a wave of headlines declaring that AI reasoning had been debunked.

My honest-to-God first reaction was ‘the people who made Siri are lecturing the people who made ChatGPT on how ineffective their AI is?’ After laughing at my own jokes for a while, I took to Twitter, in order to laugh at other people’s jokes, but found that people were actually taking the paper quite seriously, at least initially. I was immediately suspicious, though, given that Apple is the last of the major tech companies without an AI strategy. The fact that the paper dropped just ahead of WWDC25 (Apple’s global developer conference), where Apple announced no major AI breakthroughs, only reinforced my suspicion that this was a reputational counteroffensive, as opposed to a serious scientific investigation.

A technical critique of the Apple Paper would get pretty boring, so let’s try metaphor:

Suppose you recently hired a new grad, and as a first assignment, you ask them to lay out a plan for a program with 31 rooms of 5 different room types. You give them 1 hour to complete the task. You want to assess their proficiency, so you time their effort, and count the number of mouseclicks it takes them to lay out the plan. That would make you weird, but okay.

They perform well, so the next day you ask them to do another one, this time with 63 rooms and 6 different room types, and you once again measure their time, and their mouseclicks. On Day 3, same thing, but with 127 rooms and 7 room types, whereupon, they finally start to struggle a bit. At this point, you notice they’ve started to make a few errors.

Even with a few errors, laying out a plan with 127 rooms in under an hour might be considered impressive. But you’re an asshole, so on Day 4, you assign them 255 rooms of 8 different types. Being a dutiful new grad, they work on it for a little bit, but very shortly just chuck their mouse across the room and quit.

Being the weird asshole that you are, you write an editorial for an architectural magazine decrying the fact that recent grads don’t know how to program floor plans, and rage-quit when assigned any really ‘hard’ design problem.

See how fucked up that sounds? That’s basically the Apple paper.

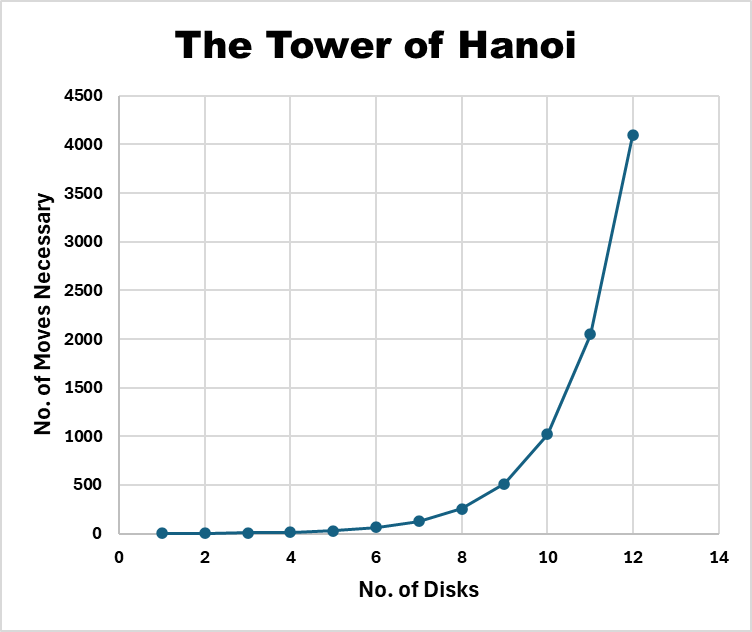

The experiment used games and puzzles to test the ‘reasoning’ of state-of-the-art LLMs (all made by Apple’s competitors). The most familiar might be the Tower of Hanoi. It’s basically a stack of disks on one of three pegs, ordered from the largest at the bottom to the smallest at the top. The object is to move the whole tower to another peg, one disk at a time, without ever placing a disk on top of a smaller one.

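The puzzle becomes exponentially more complicated as you increase the number of disks: solving a tower of n disks takes a minimum of 2^n - 1 moves, so every added disk roughly doubles the work.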

In Apple’s experiment, the ‘reasoning’ ability of state-of-the-art LLMs started to break down around 7 disks, which requires a minimum of 127 moves, in precise order, to solve. The LLMs then kinda gave up at 8 disks, which requires a minimum of 255 moves, in precise order, to solve.
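For anyone who wants to check the arithmetic, here is a minimal Python sketch of the classic recursive solution. It is only an illustration of where 127 and 255 come from, not a reconstruction of Apple’s test harness; the function names (`hanoi_moves`, `min_moves`) and the peg labels are my own assumptions.

```python
from typing import Iterator, Tuple


def hanoi_moves(n: int, source: str = "A", target: str = "C",
                spare: str = "B") -> Iterator[Tuple[str, str]]:
    """Yield the (from_peg, to_peg) moves that solve an n-disk tower."""
    if n == 0:
        return
    # Park the n-1 smaller disks on the spare peg.
    yield from hanoi_moves(n - 1, source, spare, target)
    # Move the largest disk straight to the target peg.
    yield (source, target)
    # Bring the n-1 smaller disks back on top of it.
    yield from hanoi_moves(n - 1, spare, target, source)


def min_moves(n: int) -> int:
    """Closed-form minimum number of moves for n disks: 2^n - 1."""
    return 2 ** n - 1


if __name__ == "__main__":
    for disks in range(3, 9):
        count = sum(1 for _ in hanoi_moves(disks))
        # The recursive solution uses exactly the minimum number of moves.
        assert count == min_moves(disks)
        print(f"{disks} disks: {count} moves")
```

Notice that the entire procedure fits in about ten lines, while the list of moves it produces doubles with every disk. Writing out all 255 moves by hand, in order, without a slip, is a test of stamina at least as much as a test of reasoning.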

My Grandpa’s Idiot

I took the Tower of Hanoi test once, when I was 8, as part of some kind of assessment. It had maybe three disks. I can’t remember how many moves it took me to solve it, but if it had required 255 moves, I’d have quit too. My grandfather used to say, “One idiot can ask more questions in five minutes than ten wise men can answer in twenty years.” He wasn’t talking about AI, but the shoe fits. What’s being proven here, other than that we can keep inventing problems neither humans nor machines want to solve?

We will likely always be able to do so, regardless of how far AI progresses. Just as likely, there will always be humans standing around triumphantly, clucking ‘See? I told you that AI sucks.’

Paper 2: Anatomy (of work) 101

Like I said, the second paper was a lot more interesting. In The Anatomy of Work in the Age of AI, Nisheeth Vishnoi argues that we should decompose “jobs” into decision-making and execution skills to create a clearer lens on what AI might replace, and what it won’t. I’ve been working on a similar idea, so reading Vishnoi’s paper was both frustrating and validating.

I’ll publish my version shortly, but basically, Vishnoi and I pursue the same logic: that it doesn’t really make sense to ask whether Job X is going to be replaced by AI, because Job X will be so profoundly different in the future that it might as well be Job Y. That doesn’t inherently mean that the person who was doing Job X is out of a job. Nor does it mean that they will automatically segue into Job Y. They might, or they may end up doing something completely different. The better question to ask is ‘what skills (and what kinds of skills) will be necessary in the future?’

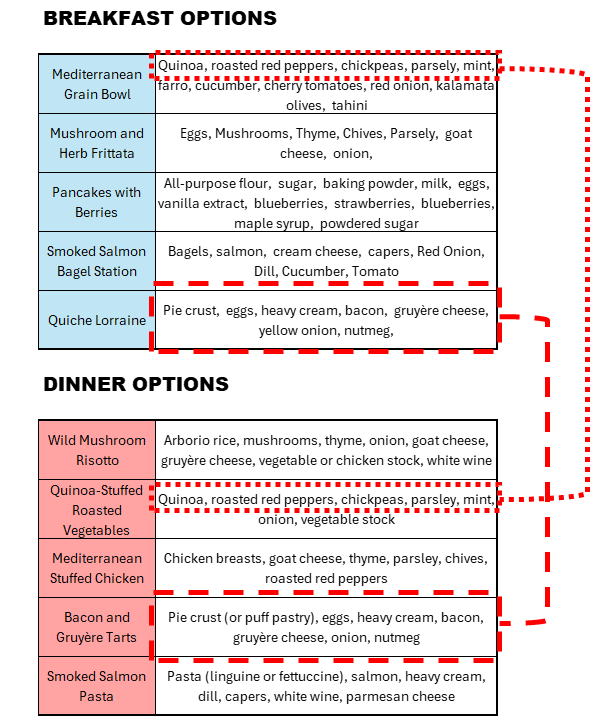

I probably have catering on the brain since returning from a recent wedding, but here’s how I’ve been thinking about it: if you’ve got ingredients for breakfast, but decide to make dinner instead, you don’t need to throw everything out — but you probably don’t keep everything either.

Swap out Quiche Lorraine for Bacon & Gruyere Tarts? Same ingredients, new context. Go from a Mediterranean Grain Bowl to Quinoa-Stuffed Veggies? Mostly reusable, but you'll need a few extras. But if you’re going from pancakes to Smoked Salmon Pasta? That’s a whole new shopping trip.

The point is: understanding what’s reusable — and what isn’t — tells you what to pick up at the store, metaphorically speaking.

Because I am also susceptible to seeing ghosts in the inkblot, when I first read Vishnoi’s paper, I dismissed it as ‘Human Hype’ – another in a series of “AI Won’t Take Your Job!” reassurance pieces that are written to soothe the anxieties of the author, the audience, or both. But as I read it more closely, I came into full agreement with its premise: the whole “AI will/won’t take your job” conversation is kind of a red herring.

However, there were enough rhetorical shortcomings that I started to wonder anew whether it actually was a reassurance piece.

God’s Favored Software Engineer

Vishnoi bundles what is a really math-heavy paper around a central example of ‘Ada’ – a software engineer:

“Consider Ada, a mid-level software engineer. Just a few years ago, her day revolved around writing code, debugging features, documenting functions, and reviewing pull requests. Today, GitHub Copilot writes much of the scaffolding. GPT drafts her documentation. Internal models flag bugs before she sees them. Her technical execution has been accelerated — even outsourced — by AI. But her job hasn’t disappeared. It has shifted.

What Ada does has changed. What Ada is responsible for has not.

What matters now is whether Ada can decide what to build, why it matters, how to navigate tradeoffs that aren’t in the training data — and crucially, whether the result is even correct. Her value no longer lies in execution — it lies in judgment and verification. This isn’t just a shift in what she does — it’s a shift in what makes her valuable. Execution can be delegated. Judgment cannot.” [Emphasis Mine]

The use of a software engineer as a central example seems like an odd choice, since employment among software engineers is noticeably, verifiably, dramatically down.

Ada, as a singular example of a mid-level engineer, may be asked to do less coding and more thinking. But the paper never grapples with how many Adas will transition into such roles — or whether that’s even plausible given firm-level hierarchies, hiring priorities, or labor trends.

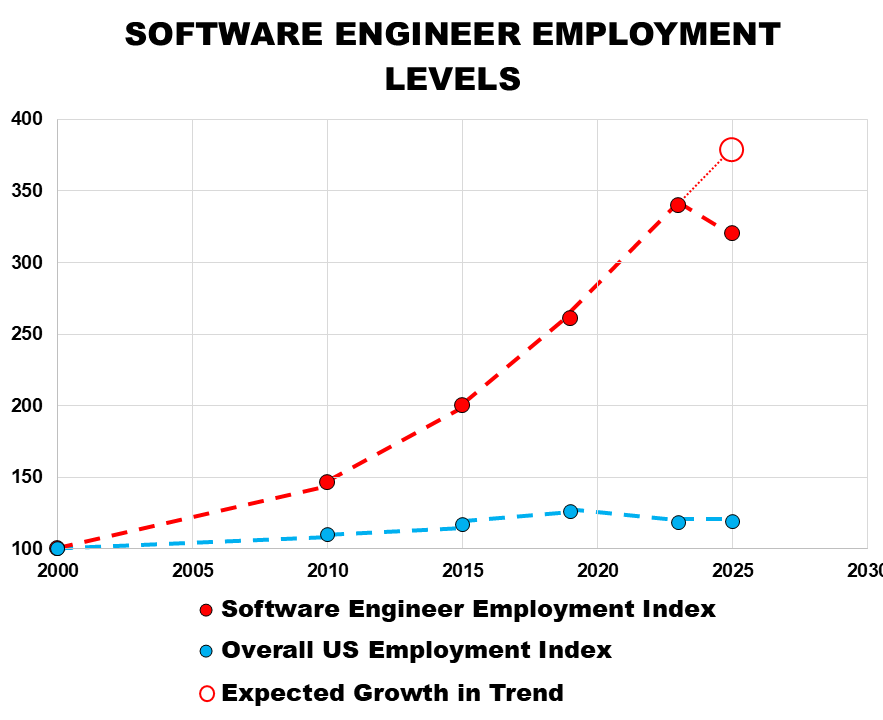

The recent trend, pointedly, is downward. Taking overall employment in 2000 as a baseline of 100, we’re able to see that for most of the past 25 years, the software engineering field expanded dramatically relative to overall employment:

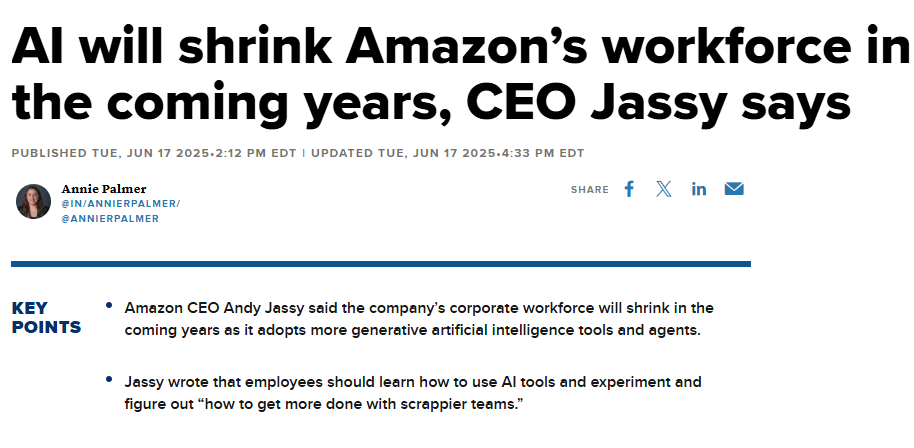

Then, after peaking in 2023 (right after ChatGPT was released), it’s been declining steadily. The most accurate comparison (IMHO) would compare the level of expected software engineering employment (red circle, above) versus its present state. That comparison reveals that roughly 250,000 software engineering jobs have either disappeared or never materialized in the first place. Is all that because of AI? No. Most industry experts also point to contributing factors like post-pandemic over-employment and such. But still, many CEOs are outright transparent about the fact that they’re scaling back because of AI. Most recently, the CEO of Amazon spilled the tea in a leaked memo:

Vishnoi seems like a pretty smart guy trying hard to figure out all this AI stuff, which made it all the more disappointing to see the logical gaps pile up in this piece.

His claim that “execution can be delegated - judgment cannot” seems flatly untrue. I mean, of course judgment can be delegated. If it couldn’t, then a CEO would have to make every decision that’s made at his or her company.

Even if we accept ‘judgment’ as a moat that AI can’t cross, Vishnoi also skips over an increasingly concerning issue in all professions: how do subsequent generations build judgment when AI assumes the work from which judgment is built? Ada has judgment because she spent all those years writing code, debugging features, and reviewing pull requests. I’m sure she’s relieved to find out that she doesn’t have to do those things anymore, and can get compensated for her judgment rather than her time. But how does she now develop the judgment needed to move on to the next phase of her career, as a senior-level software engineer? And what does Vishnoi’s model contemplate for entry-level software engineers, who don’t yet have any particular judgment to offer?

Sometimes a Cigar is Just a Cigar

I struggled to understand why Vishnoi (or anyone) would skip over these rather obvious counter-arguments, and the only plausible explanation I can imagine for these kinds of omissions is that they don’t matter. What matters is what we see in the inkblot – not what’s actually there. Because nothing is actually there – it’s just an inkblot.

In the therapist’s office, you see a turtle, or mermaid, or whatever in the inkblot because someone in a position of authority asked you to see something. In the vague, inky swirls of the future, we see something, because we need some understanding of the future to manage our anxieties in the present. That’s understandable, as long as we’re mindful about the fact that what we want of the future often becomes what we think the future will be.